Multi-modal IX

Multi-modal & Natural Interactions

Mercedes-Benz R&D North America

The concepts were implemented in the 2019 Mercedes-Benz GLE under the name “Interior Assistant”.

Scope

Use Cases, UX principles and interaction concept

Collaboration

UX Software Engineer, UX Researcher, Systems Engineer, Mechanical Design Engineer

A vision of how gesture interaction should work in a vehicle was created. Based on the vision, short-term and long-term use cases and interaction concepts were derived for implementation in upcoming car generations.

Challenge

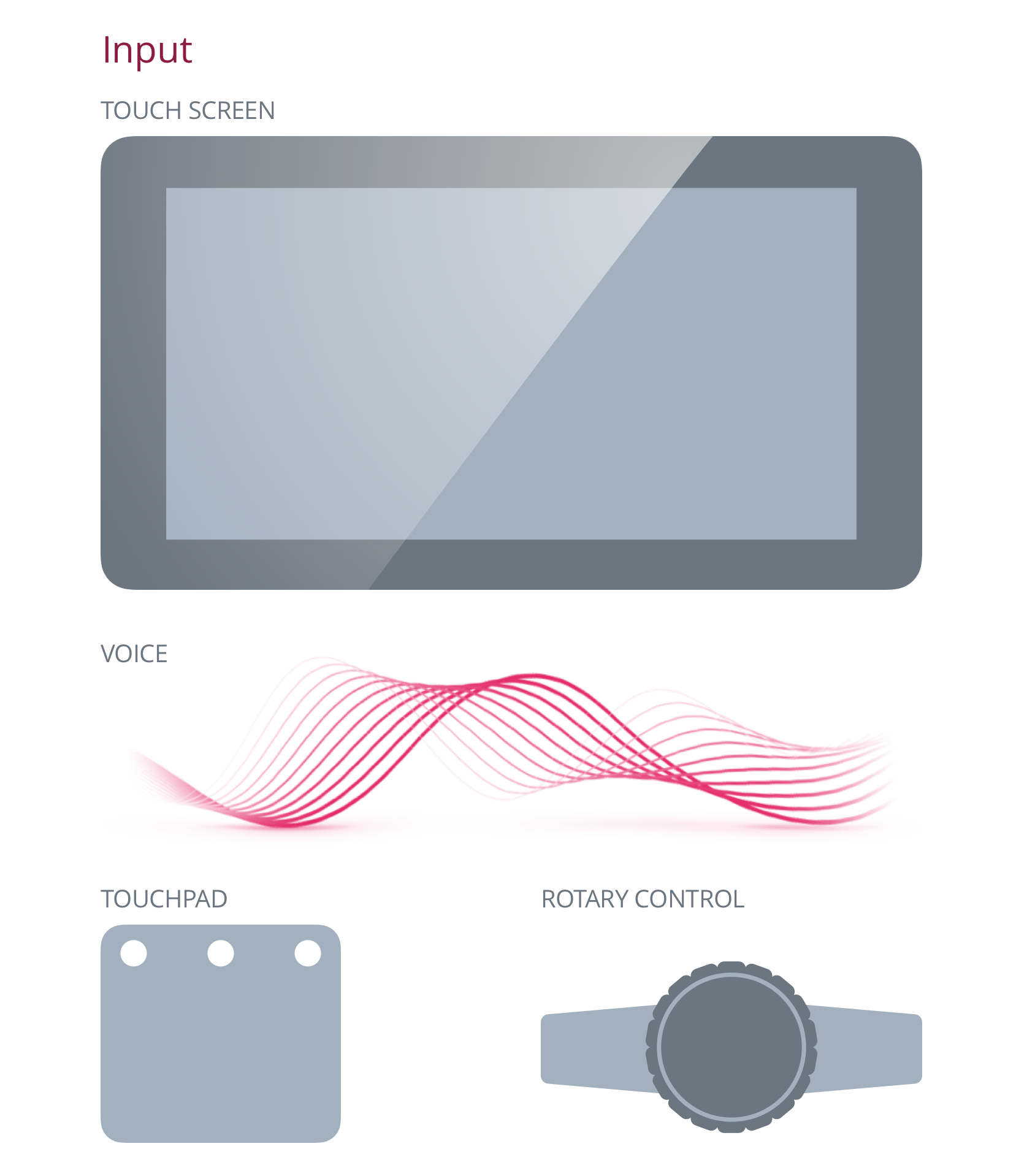

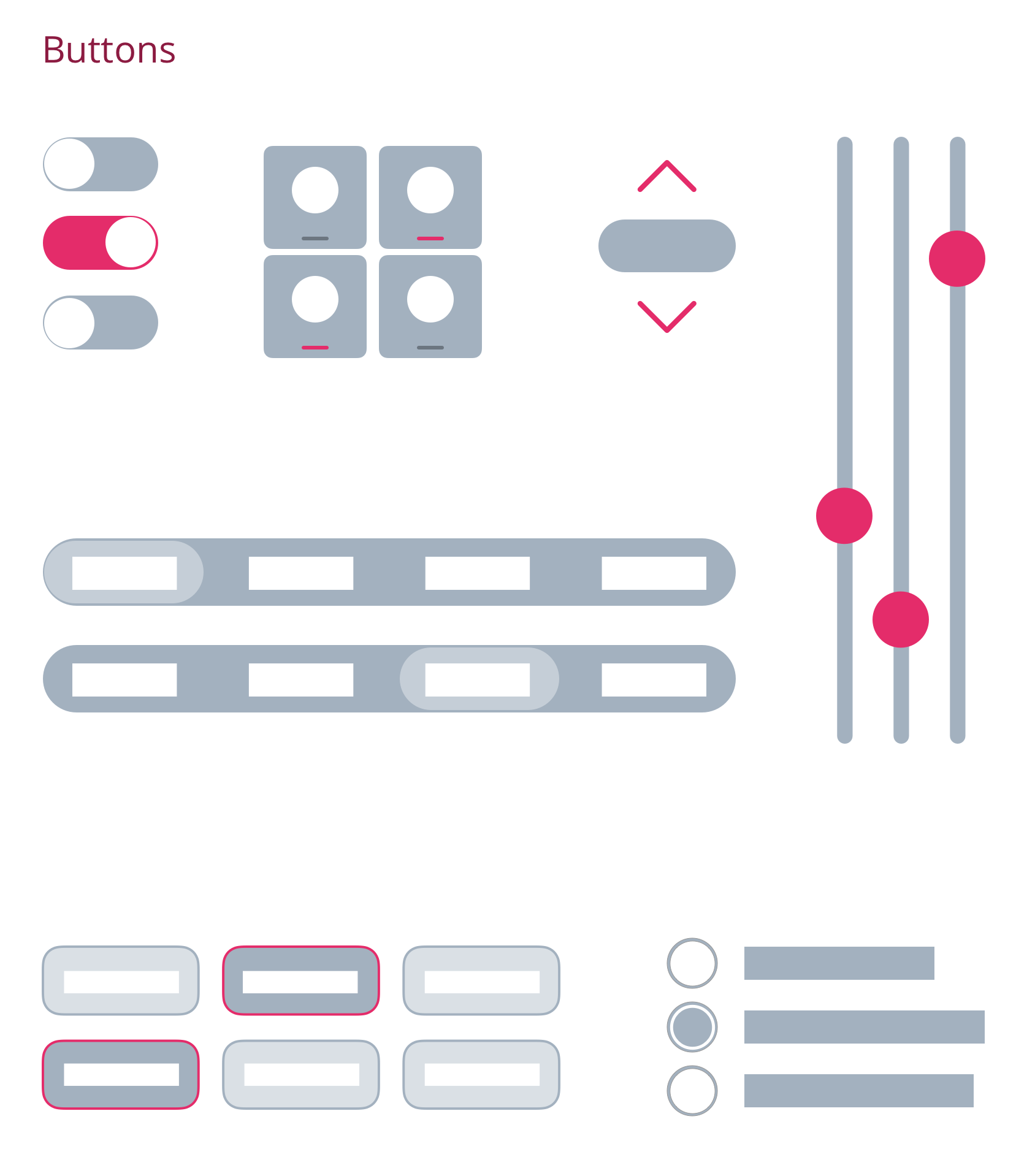

Currently, interaction with any digital system in the car is constrained to a fixed set of buttons, screens and controls. Physical controls have many benefits, but as the number of features in the car grows, there is also a push to digitize more and more of these buttons and move them onto touch screens. The iconography is becoming cryptic, and the placement of a button relative to the feature it controls often isn’t instantly clear to the user. This eventually slows down interaction, as the learning curve steepens and cognitive load increases.

Opportunity

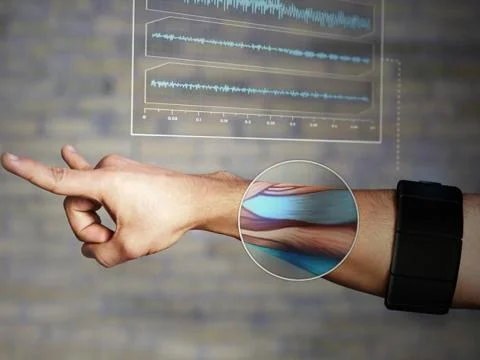

Using multiple sources as input modalities creates the opportunity to provide more intuitive interactions. By knowing which gestures (meaning explicit and implicit hand, body, eye or head postures) people are using in their cars, we can provide an interface that adapts to them, instead of the other way around.

Research

User research was conducted at several points throughout the project: at the beginning, to explore whether there is a "natural" gesture language, and formatively throughout concept development, to gather feedback on usefulness and usability.

There's no universal gesture language

One important insight was that there's no such thing as a shared gesture language for interacting with things. Mental models of how to control certain things, or even how to perform specific gestures, diverge widely from person to person.

Different hand postures and movements people performed when asked a) to "tap" and b) how they would adjust a side mirror with gestures.

Other gesture systems

Looking at other gestural interfaces, no gesture language has been really successful so far. Why? Using one is not something people do naturally. From the very beginning, humans learn to touch things to interact with them.

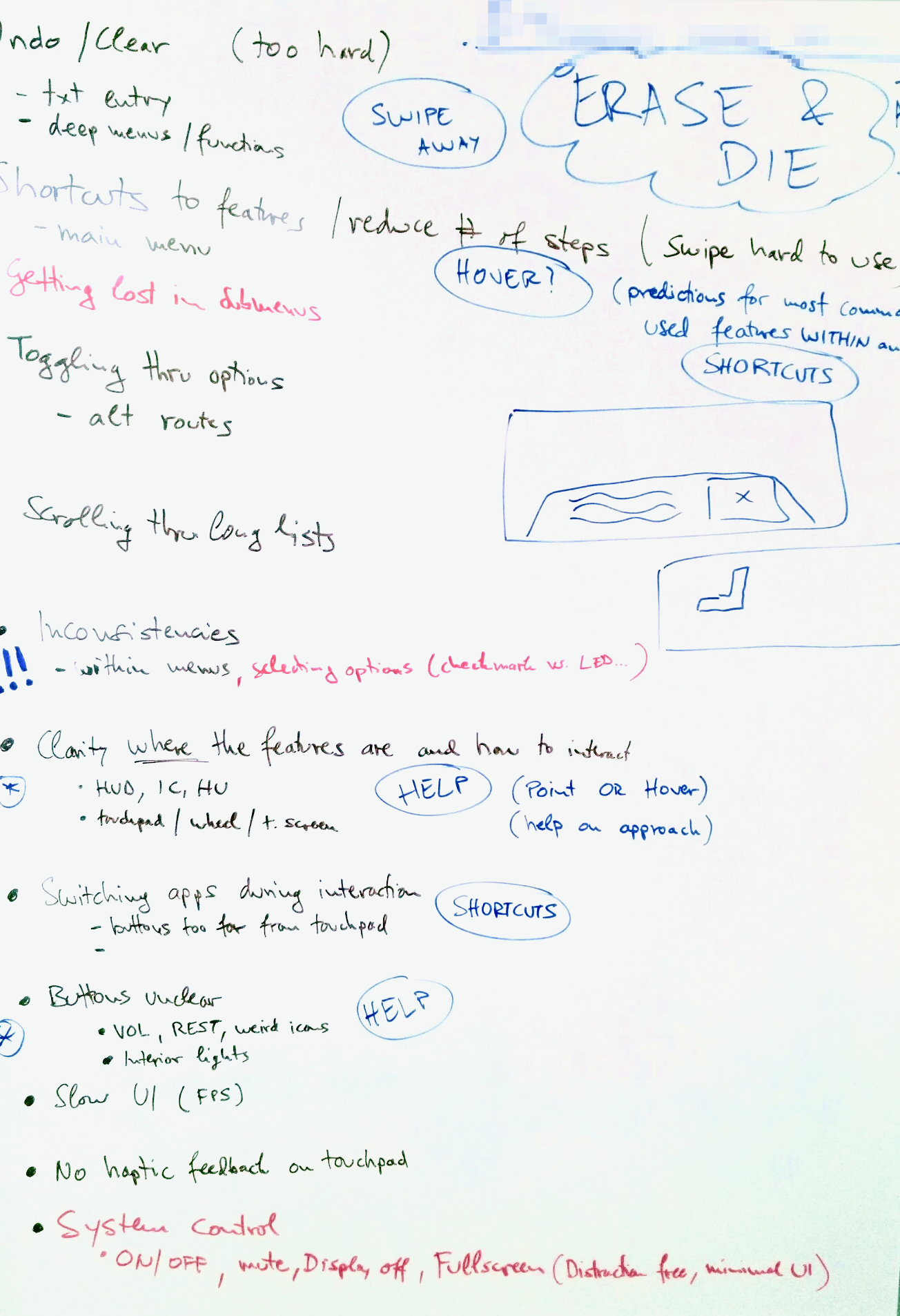

Pain Points

We didn't want to build a gesture interface just for technology's sake. That's why we started looking into the frustrations people currently experience when using our cars and competitors' cars. We were able to quickly identify a good number of pain points with current systems.

We then started investigating whether we could mitigate some of these pain points by knowing what people are doing in their cars.

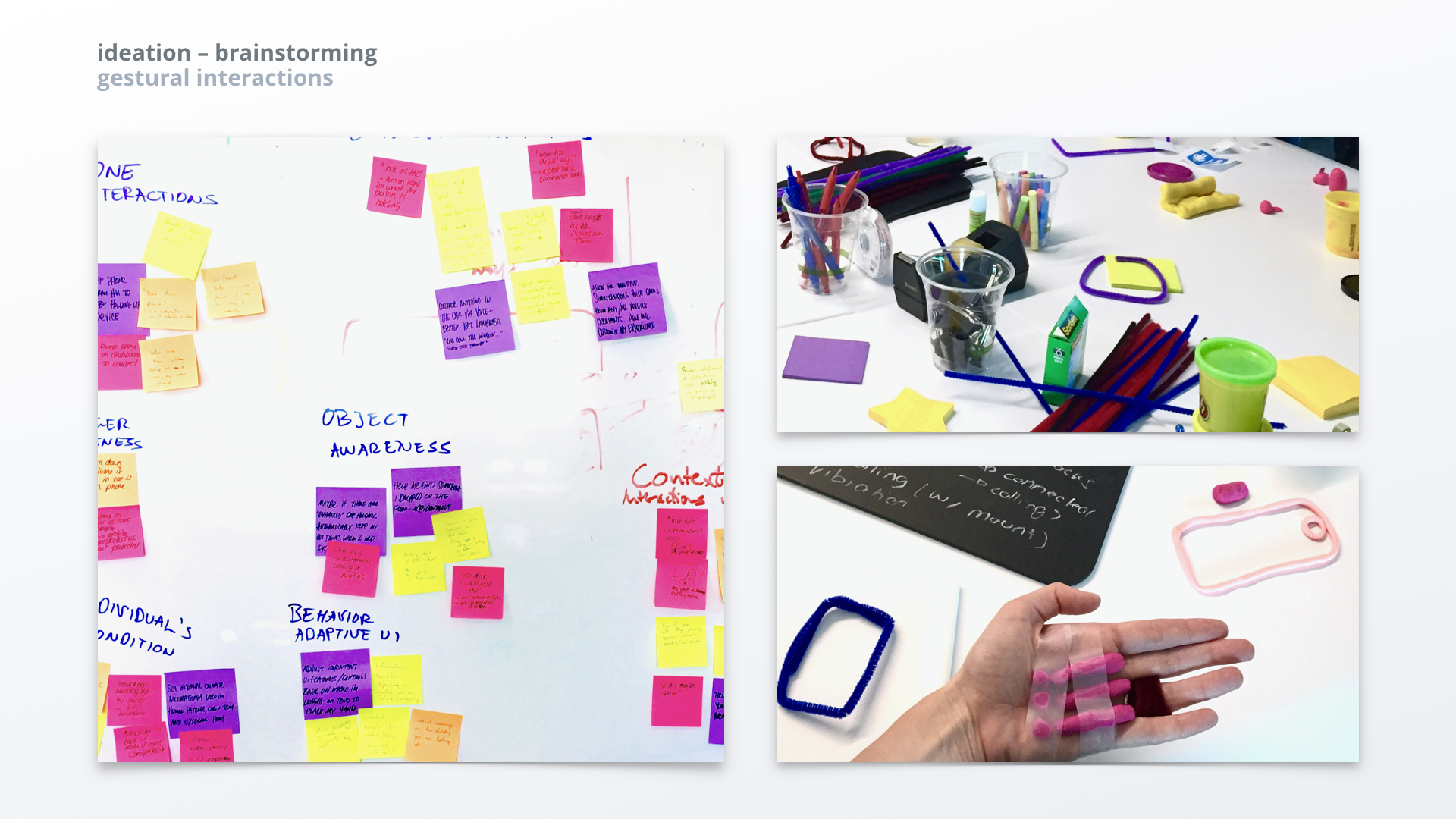

Ideation

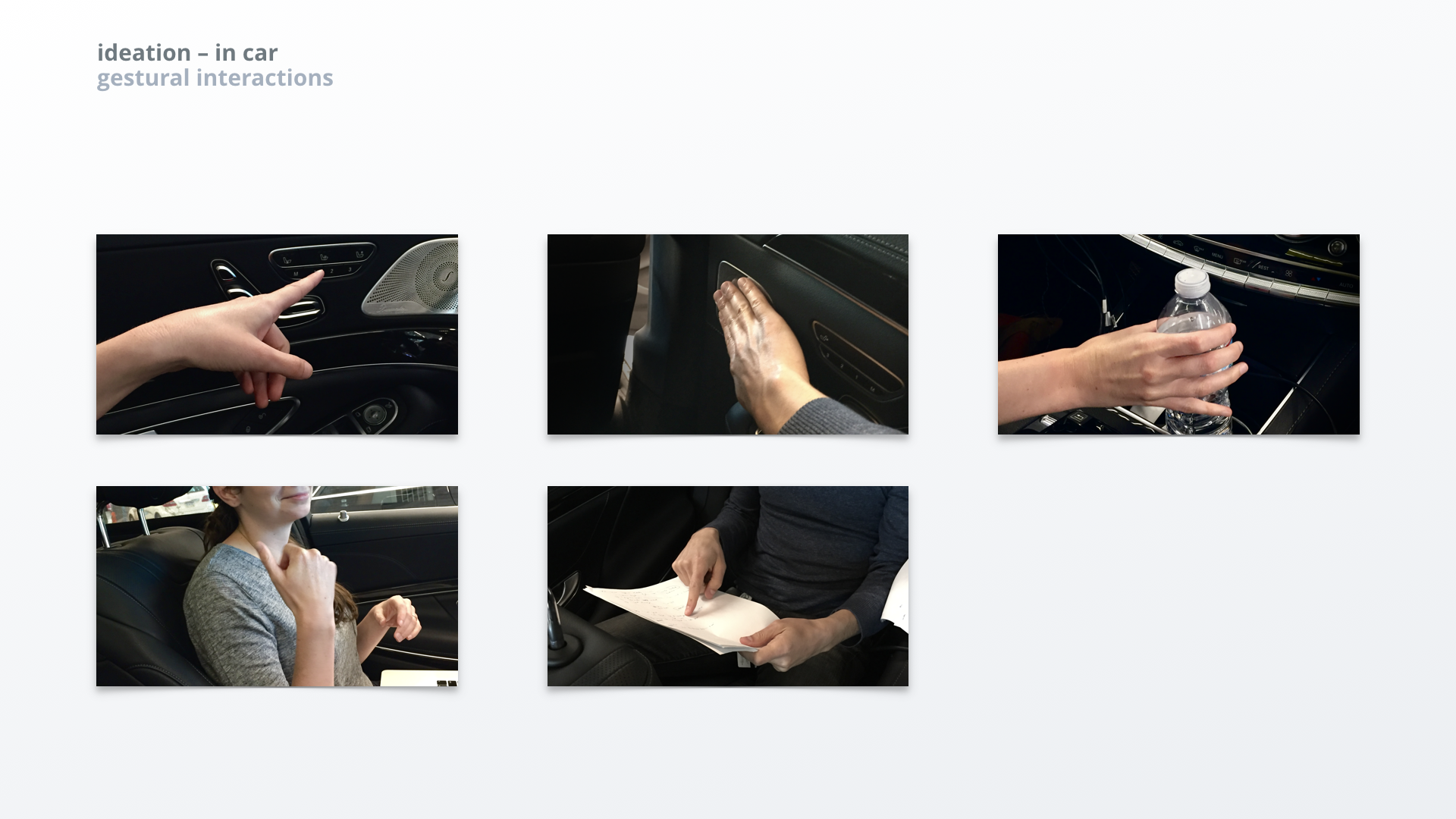

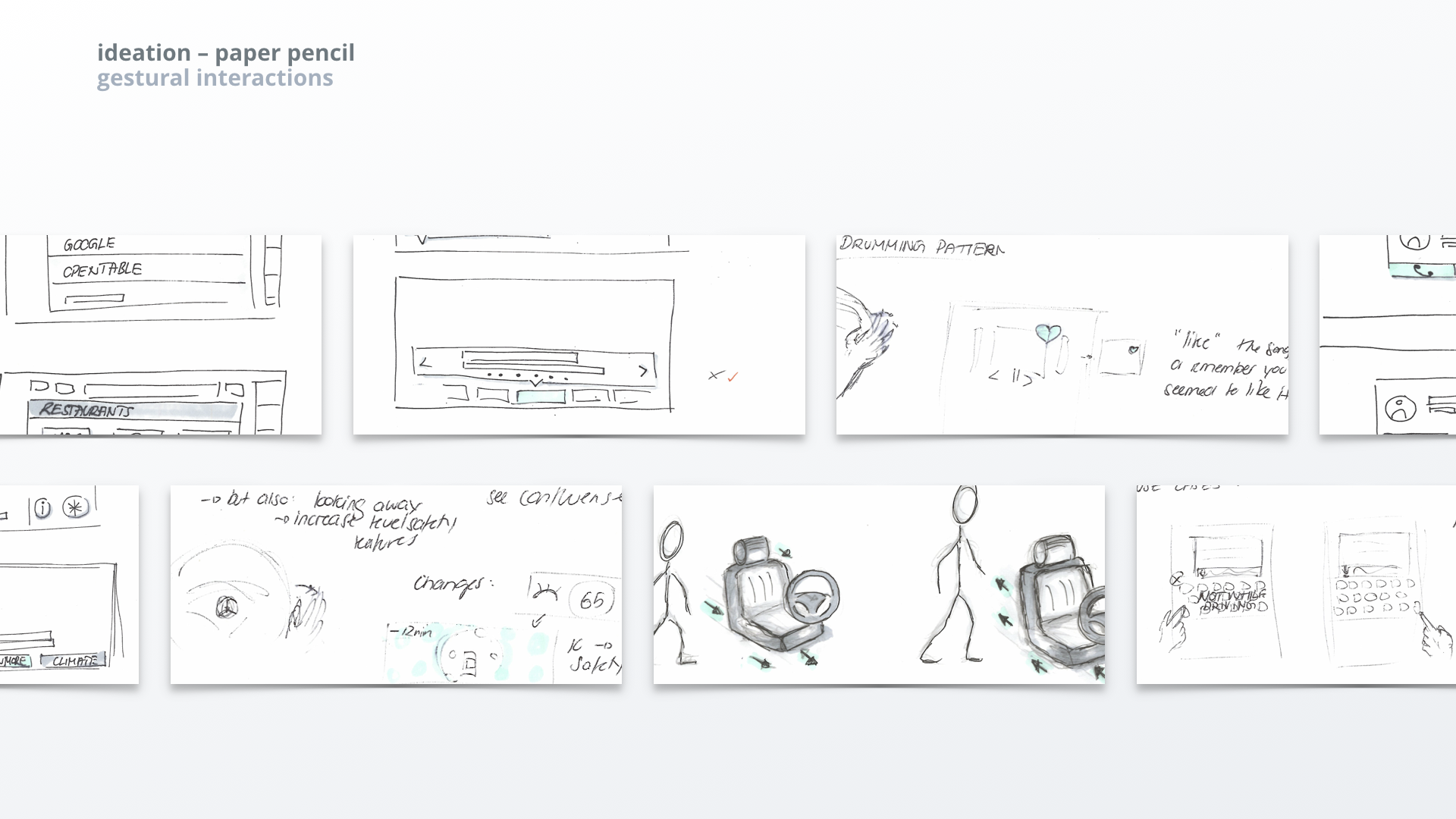

Most ideation happened in a prototyping car. This helped us think about how to address the pain points and what users would naturally do in their car. It also allowed us to quickly try out our ideas, see how they would feel, and work out what kind of feedback might be required.

Decision point. Driven by our research observation that people don't share a common gesture language or concept, and by looking at users' pain points and brainstorming on how to mitigate them, we decided to focus on implicit gestures instead of explicit ones. This means we wanted to focus on the kinds of motion people perform naturally in the car (e.g. touching things) and enrich those interactions, instead of having our users learn abstract gestures to control things in mid-air. The following design principles and main themes resulted from this decision.

Design Principles

Main Themes

From the pain points we identified, and the use case and interaction ideas generated to overcome them, six major themes emerged. These themes point to how gestural interactions can support users and add a benefit, without users having to learn a separate gesture language.

automatic adjustments

intelligently adapt to people's actions and needs

tangible shortcuts

enrich interaction with existing interior elements

contextual help

no manual needed

contextual voice interactions

with the interior and the environment

seamless device integration

let people make use of the device they use most of the day

interior safety & awareness

have eyes inside the vehicle

Concepts

Our interaction concepts focused on implicit interactions: observe what people already do naturally in the car and try to enrich or improve that experience.

INTELLIGENT LIGHT

When people are driving alone at night and reach over to the passenger seat, or behind them to the rear, they are supported by a targeted light that comes on automatically. The light helps the user find what they're looking for faster and therefore reduces driver distraction. Furthermore, it's a very attentive gesture on the part of the car, helping to build a positive relationship between driver and car.

What Was Delivered

The outcomes of these activities were use cases, the movements (or gestures) that people would perform in these use cases, and graphical user interface concepts. Furthermore, an interaction concept was developed for how people could discover those new interactions.

The deliverables for this project were:

proof of concept prototype (gestural interaction & UI)

collection of short-term and longer-term use cases

design principles

prototype and vision videos

user research feedback

These deliverables were handed over to our stakeholders and to the teams responsible for bringing these concepts to series production.